Winutils Exe Hadoop S

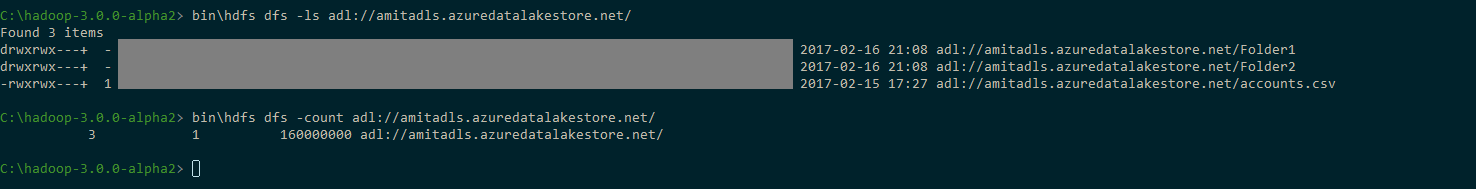

Windows binaries for Hadoop versions. These are built directly from the same git commit used to create the official ASF releases; they are checked out and built on a Windows VM which is dedicated purely to testing Hadoop/YARN apps on Windows.

I am getting the following error while starting the namenode for the latest hadoop-2.2 release. I didn't find a winutils.exe file in the hadoop bin folder. I tried the commands below.

15 Answers

Simple solution: download it from here and add it to $HADOOP_HOME/bin.

(Source: click here)

EDIT:

For hadoop-2.6.0 you can download the binaries from Titus Barik's blog.

I not only needed to point HADOOP_HOME to the extracted directory [path], but also had to provide the system property -Djava.library.path=[path]\bin to load the native libraries (DLLs).

If we directly take the binary distribution of Apache Hadoop 2.2.0 release and try to run it on Microsoft Windows, then we'll encounter ERROR util.Shell: Failed to locate the winutils binary in the hadoop binary path.

The binary distribution of the Apache Hadoop 2.2.0 release does not contain some Windows native components (such as winutils.exe and hadoop.dll). These are required (not optional) to run Hadoop on Windows.

So you need to build a Windows native binary distribution of Hadoop from source, following the 'BUILDING.txt' file located inside the Hadoop source distribution. You can also follow the following posts for a step-by-step guide with screenshots.

If you face this problem when running a self-contained local application with Spark (i.e., after adding spark-assembly-x.x.x-hadoopx.x.x.jar or the Maven dependency to the project), a simpler solution is to put winutils.exe (download from here) in 'C:\winutil\bin'. You can then point Hadoop's home directory at that location by setting the hadoop.home.dir system property to 'C:\winutil\' in your code, before the Spark context is created.

Source: Click here

The statement java.io.IOException: Could not locate executable null\bin\winutils.exe

explains that a null was received when expanding or replacing an environment variable. If you look at the source of Shell.java in the Hadoop Common package, you will find that the HADOOP_HOME variable is not set, so null is used in its place, hence the error.

So either the HADOOP_HOME environment variable or the hadoop.home.dir system property needs to be set properly.
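The lookup described above can be modeled in a few lines. This is a simplified Python sketch of the behavior, not Hadoop's actual Java source: the function names are made up for illustration, the two dictionaries stand in for JVM system properties and environment variables, and it assumes the property is consulted first with the environment variable as the fallback.

```python
import ntpath  # Windows path rules, whatever OS runs this sketch


def resolve_hadoop_home(system_props, env):
    """Return the Hadoop home: hadoop.home.dir first, then HADOOP_HOME."""
    home = system_props.get("hadoop.home.dir")
    if home is None:
        home = env.get("HADOOP_HOME")
    return home


def winutils_path(home):
    """Build the path where winutils.exe is expected for a given home."""
    # Java renders a null reference as the text "null" when it is
    # concatenated into a string, which is where the "null\bin\winutils.exe"
    # in the error message comes from.
    prefix = "null" if home is None else home
    return ntpath.join(prefix, "bin", "winutils.exe")


# Nothing configured: reproduces the path seen in the exception.
print(winutils_path(resolve_hadoop_home({}, {})))
# Property set: the path points into the chosen directory.
print(winutils_path(resolve_hadoop_home({"hadoop.home.dir": "C:\\winutil\\"}, {})))
```

Seen this way, a "null" at the start of the path means neither setting reached the JVM, which is a different problem from winutils.exe missing inside a correctly configured directory.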

Hope this helps.

Thanks, Kamleshwar.

I just ran into this issue while working with Eclipse. In my case, I had the correct Hadoop version downloaded (hadoop-2.5.0-cdh5.3.0.tgz), I extracted the contents and placed it directly in my C drive. Then I went to

Eclipse->Debug/Run Configurations -> Environment (tab) -> and added

variable: HADOOP_HOME

Value: C:\hadoop-2.5.0-cdh5.3.0

You can download winutils.exe here: http://public-repo-1.hortonworks.com/hdp-win-alpha/winutils.exe

Then copy it to your HADOOP_HOME/bin directory.

winutils.exe is required for Hadoop to perform Hadoop-related commands. Please download the hadoop-common-2.2.0 zip file; winutils.exe can be found in its bin folder. Extract the zip file and copy winutils.exe into the local hadoop/bin folder.

I was facing the same problem. Removing bin from the HADOOP_HOME path solved it for me: HADOOP_HOME should point to the Hadoop installation directory itself, not to its bin subdirectory.

System restart may be needed. In my case, restarting the IDE was sufficient.
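A quick way to catch this mistake is to check whether the configured value ends in a bin component. The helper below is a hypothetical sketch (the name `normalize_hadoop_home` is mine, not part of any Hadoop or Spark API) that returns the directory HADOOP_HOME should point to.

```python
from pathlib import PureWindowsPath


def normalize_hadoop_home(value):
    """Return the install directory, dropping a trailing 'bin' component."""
    path = PureWindowsPath(value)
    if path.name.lower() == "bin":
        # HADOOP_HOME was set one level too deep; step back up.
        path = path.parent
    return str(path)


print(normalize_hadoop_home("C:\\hadoop-2.5.0-cdh5.3.0\\bin"))
print(normalize_hadoop_home("C:\\hadoop-2.5.0-cdh5.3.0"))
```

If the returned value differs from what is currently set, HADOOP_HOME probably includes the bin folder and should be shortened.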

Set the HADOOP_HOME environment variable in Windows to resolve the problem.

You can find the answer in org/apache/hadoop/hadoop-common/2.2.0/hadoop-common-2.2.0-sources.jar!/org/apache/hadoop/util/Shell.java:

IOException from

HADOOP_HOME_DIR from

In PySpark, to run a local Spark application from PyCharm, use the lines below.
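A minimal sketch of that kind of setup, assuming winutils.exe lives under a folder such as C:\winutils\bin. The path is a placeholder and `configure_hadoop_home` is a hypothetical helper, not a PySpark function. Adding the bin folder to PATH is optional and only there so native DLLs can be found.

```python
import os


def configure_hadoop_home(hadoop_home, env=os.environ):
    """Set HADOOP_HOME and put its bin directory on PATH if it is missing."""
    bin_dir = hadoop_home.rstrip("\\/") + "\\bin"
    env["HADOOP_HOME"] = hadoop_home
    # Windows separates PATH entries with semicolons.
    entries = [p for p in env.get("PATH", "").split(";") if p]
    if bin_dir.lower() not in (p.lower() for p in entries):
        env["PATH"] = ";".join([bin_dir] + entries)
    return env


# Call this before any SparkSession or SparkContext is created, because the
# JVM that PySpark launches inherits the environment at startup.
# "C:\\winutils" is a placeholder for the folder that contains bin\winutils.exe:
# configure_hadoop_home("C:\\winutils")
```

Setting the variable inside the script only affects that Python process and the JVM it starts, so nothing has to change in the system-wide settings.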

I was getting the same issue on Windows. I fixed it by:

- Downloading hadoop-common-2.2.0-bin-master from link.

- Creating a user variable HADOOP_HOME under Environment Variables and assigning the path of the hadoop-common bin directory as its value.

- Verifying it by running hadoop in cmd.

- Restarting the IDE and running the application again.

Download the desired version of the Hadoop folder from this link as a zip (if you are installing Spark on Windows, pick the Hadoop version your Spark distribution was built for).

Extract the zip to the desired directory. You need a directory of the form hadoop\bin (create this structure explicitly if necessary), with bin containing all the files from the bin folder of the downloaded Hadoop. This includes many files such as hdfs.dll and hadoop.dll, in addition to winutils.exe.

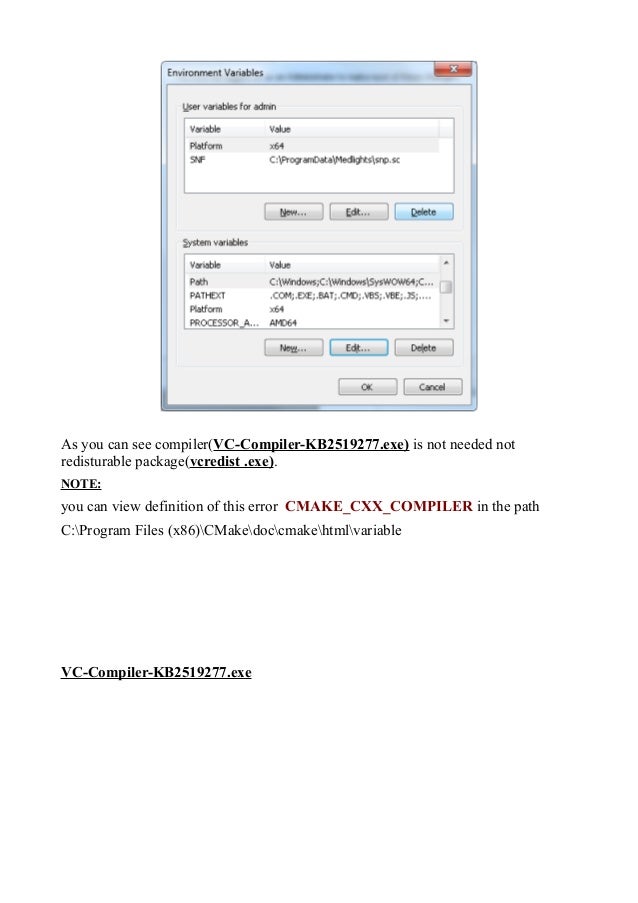

Now create the environment variable HADOOP_HOME and set it to <path-to-hadoop-folder>\hadoop. Then add ;%HADOOP_HOME%\bin; to the PATH environment variable.

Open a 'new command prompt' and try rerunning your command.
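Before rerunning, it can help to confirm that the expected files really are under the bin folder. The following is a small sketch under my own assumptions: `missing_native_files` is a hypothetical helper, and the default list of required files just names the two mentioned above.

```python
import ntpath  # Windows path joining, whatever OS runs the check
import os


def missing_native_files(hadoop_home,
                         required=("winutils.exe", "hadoop.dll"),
                         is_file=os.path.isfile):
    """List the required files that are absent from <hadoop_home>\\bin."""
    bin_dir = ntpath.join(hadoop_home, "bin")
    return [name for name in required
            if not is_file(ntpath.join(bin_dir, name))]


# Typical use on the Windows machine itself:
# print(missing_native_files(os.environ.get("HADOOP_HOME", "")))
```

An empty list means both files were found. Anything else names what still has to be copied into the bin folder.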

- Download winutils.exe from the URL: https://github.com/steveloughran/winutils/hadoop-version/bin

- Paste it under HADOOP_HOME/bin

Note: You should set the environment variables:

User variable:

Variable: HADOOP_HOME

Value: the Hadoop or Spark directory

I used hbase-1.3.0 and hadoop-2.7.3. Setting the HADOOP_HOME environment variable and copying winutils.exe into the HADOOP_HOME/bin folder solves the problem on Windows. Take care to set HADOOP_HOME to the Hadoop installation folder (the /bin suffix is not needed for these versions). Additionally, I preferred to use the cross-platform tool Cygwin to get as much Linux functionality as possible, because the HBase team recommends a Linux/Unix environment.

winutils.exe is used to run shell commands for Spark. You need this file when you want to run Spark without installing Hadoop.

Steps are as follows:

Download winutils.exe for Hadoop 2.7.1 from https://github.com/steveloughran/winutils/tree/master/hadoop-2.7.1/bin [NOTE: If you are using a different Hadoop version, download winutils.exe from the corresponding Hadoop version folder in the same GitHub repository.]

Now create a folder 'winutils' on the C: drive, create a folder 'bin' inside it, and copy winutils.exe into that folder. The location of winutils.exe will then be C:\winutils\bin\winutils.exe

Now open the environment variables dialog and set HADOOP_HOME=C:\winutils [NOTE: Do not add \bin to HADOOP_HOME, and there is no need to add HADOOP_HOME to Path.]

Your issue should now be resolved.

I'm curious! To my knowledge, HDFS needs datanode processes to run, and this is why it's only working on servers. Spark can run locally though, but needs winutils.exe which is a component of Hadoop. But what exactly does it do? How is it, that I cannot run Hadoop on Windows, but I can run Spark, which is built on Hadoop?

1 Answer

I know of at least one usage: it is for running shell commands on Windows. You can find it in org.apache.hadoop.util.Shell; other modules depend on this class and use its methods, for example the getGetPermissionCommand() method.
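To illustrate what that method does, here is a Python paraphrase of its logic as I recall it from Hadoop 2.x. This is not the Java source, the exact arguments may differ between Hadoop versions, and the default winutils path is only a placeholder.

```python
def get_permission_command(is_windows,
                           winutils="C:\\winutils\\bin\\winutils.exe"):
    """Return the argv used to list a file's permissions on the platform."""
    if is_windows:
        # There is no /bin/ls on Windows, so the call goes to winutils.exe,
        # which implements an 'ls' subcommand.
        return [winutils, "ls", "-F"]
    return ["/bin/ls", "-ld"]


print(get_permission_command(is_windows=True))
print(get_permission_command(is_windows=False))
```

This is why Spark asks for winutils.exe even when no Hadoop cluster is involved: the Hadoop file system classes it uses for local files shell out for operations like this one.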