Winutils.exe for Hadoop

Windows binaries for Hadoop versions

These are built directly from the same git commit used to create the official ASF releases. They are checked out and built on a Windows VM that is dedicated purely to testing Hadoop/YARN apps on Windows. It is not a day-to-day system, so it is isolated from drive-by and email security attacks.

If you see IOException: Could not locate executable C:\hadoop\bin\winutils.exe in the Hadoop binaries, follow this article. By the end of the tutorial you will be able to get rid of these errors by building a Hadoop distribution.

Security: can you trust this release?

- I am the Hadoop committer 'stevel': I have nothing to gain by creating malicious versions of these binaries. If I wanted to run anything on your systems, I'd be able to add the code into Hadoop itself.

- I'm signing the releases.

- My keys are published on the ASF committer keylist under my username.

- The latest GPG key (E7E4 26DF 6228 1B63 D679 6A81 950C C3E0 32B7 9CA2) actually lives on a yubikey for physical security; the signing takes place there.

- The same YubiKey is used for 2FA to GitHub, for uploading artifacts, and for making the release.
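A user could check a downloaded artifact against its detached .asc signature with gpg's --verify. The sketch below wraps that in Python and pulls the signing key's fingerprint out of gpg's machine-readable VALIDSIG status line, so it can be compared with the published one. It assumes gpg is on PATH and the signer's public key is already imported (for example from the ASF committer keylist). The helper names are hypothetical. Note that the fingerprint reported may belong to a signing subkey rather than the primary key.

```python
import subprocess
from pathlib import Path
from typing import Optional


def normalize_fingerprint(fpr: str) -> str:
    """Strip spaces and upper-case a fingerprint written like 'E7E4 26DF ...'."""
    return "".join(fpr.split()).upper()


def parse_validsig(status_output: str) -> Optional[str]:
    """Return the fingerprint from a '[GNUPG:] VALIDSIG <fpr> ...' status line."""
    for line in status_output.splitlines():
        parts = line.split()
        if len(parts) >= 3 and parts[0] == "[GNUPG:]" and parts[1] == "VALIDSIG":
            return parts[2]
    return None


def signing_fingerprint(artifact: Path, gpg: str = "gpg") -> Optional[str]:
    """Verify <artifact>.asc against artifact; return the signing key fingerprint or None."""
    signature = artifact.with_name(artifact.name + ".asc")
    if not artifact.is_file() or not signature.is_file():
        return None  # nothing to verify
    # --status-fd 1 writes machine-readable status lines to stdout
    result = subprocess.run(
        [gpg, "--status-fd", "1", "--verify", str(signature), str(artifact)],
        capture_output=True,
        text=True,
    )
    if result.returncode != 0:
        return None  # bad signature, unknown key, or another gpg failure
    return parse_validsig(result.stdout)
```

A zero exit status only means the signature is cryptographically good. Trusting the key is a separate decision, which is what comparing `normalize_fingerprint(...)` of the published fingerprint with the returned value is for.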

Someone malicious would need physical access to my office to sign artifacts under my name. If they could do that, they could commit malicious code into Hadoop itself, even signing those commits with the same GPG key. They would, though, need the PIN to unlock the key, which I have to type in whenever the laptop wakes up and I want to sign something. Getting it would mean getting something malicious onto my machine, or sniffing the Bluetooth packets from the keyboard to the laptop. Were someone to get physical access to my machine, they could probably install a malicious version of git, one which modified code before the check-in. I don't actually check my own patches for tampering, but we do tend to keep an eye on what our peers put in.

The other tactic would have been for a malicious yubikey to end up being delivered by Amazon to my house. I don't have any defences against anyone going to that level of effort.

2017-12 update: that key has been revoked, though it was never actually compromised. A lack of randomness in the YubiKey's prime number generator led to an emergency cancel session, and I have not set things up properly again since.

Note: Artifacts prior to Hadoop 2.8.0-RC3 [were signed with a different key](https://pgp.mit.edu/pks/lookup?op=vindex&search=0xA92454F9174786B4); again, on the ASF key list.

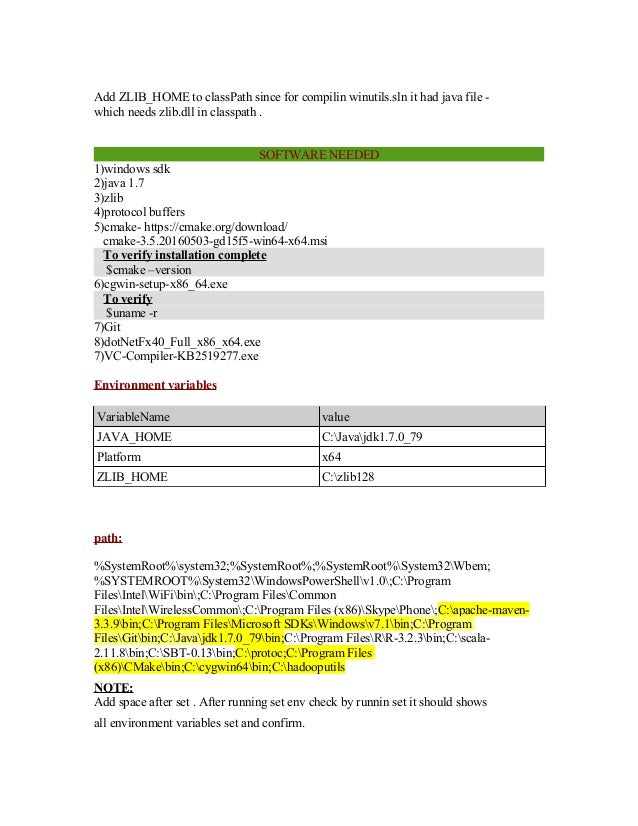

Build Process

A dedicated Windows Server 2012 VM is used for building and testing Hadoop stack artifacts. It is not used for anything else.

This uses a Visual Studio 2010 build setup, with compiler and linker version 16.00.30319.01 for x64.

Maven 3.3.9 was used, and its signature was checked to be that of Jason@maven.org. While my key list doesn't directly trust that signature, I do trust those of other signatories:

Java 1.8:

Release Process

Windows VM

In hadoop-trunk

The version to build is checked out from the declared SHA1 checksum of the release/RC, hopefully moving to signed tags once signing becomes more common there.
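Before building, it can help to confirm that the working tree really sits at the declared commit. The sketch below checks out a SHA1 and compares `git rev-parse HEAD` with it. It assumes git is on PATH. `repo_dir` and the function names are hypothetical, and requiring a full 40-digit hash is a design choice so that a short prefix cannot match by accident.

```python
import re
import subprocess

_FULL_SHA1 = re.compile(r"[0-9a-f]{40}")


def head_matches(declared_sha1: str, head_sha1: str) -> bool:
    """True if both are the same full 40-hex-digit SHA1 (case-insensitive)."""
    declared = declared_sha1.strip().lower()
    head = head_sha1.strip().lower()
    return bool(_FULL_SHA1.fullmatch(declared)) and declared == head


def checkout_and_check(repo_dir: str, declared_sha1: str) -> bool:
    """Check out declared_sha1 (detached HEAD) and confirm HEAD now points at it."""
    subprocess.run(["git", "-C", repo_dir, "checkout", declared_sha1], check=True)
    head = subprocess.run(
        ["git", "-C", repo_dir, "rev-parse", "HEAD"],
        check=True,
        capture_output=True,
        text=True,
    ).stdout
    return head_matches(declared_sha1, head)
```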

The build was executed, relying on the fact that the native-win profile is automatic on Windows:

This creates a distribution, with the native binaries under hadoop-dist\target\hadoop-X.Y.Z\bin.

Create a zip file containing the contents of the winutils%VERSION% directory. This is done on the Windows machine to avoid any risk of the Windows line-ending files being modified by git. The zip isn't committed to git. It is just copied over to the host VM via the mounted file share.
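The zip step can be sketched with Python's standard `zipfile` module. `ZipFile.write` stores each file's bytes unchanged, so CRLF line endings survive. The directory and archive names are hypothetical. The archive should be written outside the source directory, otherwise the walk would pick up the zip being written.

```python
import zipfile
from pathlib import Path


def zip_directory(src_dir: Path, zip_path: Path) -> list:
    """Zip every file under src_dir into zip_path; return the archive member names.

    Member names are relative to src_dir's parent, so the top-level folder
    (for example a hypothetical 'hadoop-2.8.1') is kept inside the zip.
    Keep zip_path outside src_dir so the archive does not include itself.
    """
    src_dir = Path(src_dir)
    names = []
    with zipfile.ZipFile(zip_path, "w", compression=zipfile.ZIP_DEFLATED) as zf:
        for path in sorted(src_dir.rglob("*")):
            if path.is_file():
                arcname = path.relative_to(src_dir.parent).as_posix()
                zf.write(path, arcname)  # raw bytes, line endings untouched
                names.append(arcname)
    return names
```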

Host machine: Sign everything

Pull down the newly added files from GitHub, then sign the binary ones and push the .asc signatures back.

There isn't a way to sign multiple files in gpg2 on the command line, so it's either write a loop in bash or just edit the line and let path completion simplify your life. Here's the list of sign commands:

Verify that the files exist, then sign them.


Then go to the directory with the zip file and sign that file too
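The "write a loop" option can also be done outside bash. Here is a minimal Python sketch that finds binary files without a matching .asc and runs `gpg --armor --detach-sign` on each, which writes `<file>.asc` next to it. The set of suffixes treated as "binary" is an assumption, since the exact file list isn't given here. `dry_run` is a hypothetical convenience for checking which files would be signed before touching the key.

```python
import subprocess
from pathlib import Path

# Assumed set of binary artifact types; adjust to what the build actually produced.
BINARY_SUFFIXES = {".exe", ".dll", ".lib", ".pdb", ".zip"}


def files_to_sign(directory: Path) -> list:
    """Binary files under directory that do not yet have a .asc signature beside them."""
    pending = []
    for path in sorted(Path(directory).rglob("*")):
        if path.is_file() and path.suffix.lower() in BINARY_SUFFIXES:
            if not path.with_name(path.name + ".asc").exists():
                pending.append(path)
    return pending


def sign_all(directory: Path, gpg: str = "gpg", dry_run: bool = False) -> list:
    """Create an ASCII-armored detached signature for each pending file."""
    signed = []
    for path in files_to_sign(directory):
        if not dry_run:
            # gpg asks for the key's PIN/passphrase as needed
            subprocess.run([gpg, "--armor", "--detach-sign", str(path)], check=True)
        signed.append(path)
    return signed
```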

GitHub: create the release

- Go to the github repository

- Verify the most recent commit is visible

- Tag the release with the Hadoop version, and include the checksum of the commit it was built from

- Drop in the .zip and .zip.asc files as binary artifacts

I am getting the following error while starting the NameNode for the latest hadoop-2.2 release. I didn't find winutils.exe in the Hadoop bin folder. I tried the following commands


Simple solution: download it from here and add it to $HADOOP_HOME/bin.

(Source :Click here)


EDIT:

For hadoop-2.6.0, you can download the binaries from Titus Barik's blog.

I not only needed to point HADOOP_HOME to the extracted directory [path]. I also had to provide the system property -Djava.library.path=[path]\bin to load the native libraries (DLLs).

If we directly take the binary distribution of Apache Hadoop 2.2.0 release and try to run it on Microsoft Windows, then we'll encounter ERROR util.Shell: Failed to locate the winutils binary in the hadoop binary path.

The binary distribution of the Apache Hadoop 2.2.0 release does not contain some Windows native components, such as winutils.exe and hadoop.dll. These are required, not optional, to run Hadoop on Windows.

So you need to build a Windows native binary distribution of Hadoop from the source code, following the BUILD.txt file located inside the Hadoop source distribution. You can also follow the following posts for a step-by-step guide with screenshots.

If you face this problem when running a self-contained local application with Spark (i.e., after adding spark-assembly-x.x.x-hadoopx.x.x.jar or the Maven dependency to the project), there is a simpler solution. Put winutils.exe (download from here) in C:\winutil\bin. Then point Hadoop at that home directory by setting the hadoop.home.dir system property to C:\winutil\ in your code before Spark starts.

Source: Click here

The statement java.io.IOException: Could not locate executable null\bin\winutils.exe

shows that null was received where the value of an environment variable should have been substituted. If you look at the source of Shell.java in the hadoop-common package, you will find that the HADOOP_HOME variable is not set. Null is used in its place, hence the error.

So either the HADOOP_HOME environment variable or the hadoop.home.dir system property needs to be set properly.
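To make the "null" in the message concrete, here is a rough Python analogue of the lookup described above. It is modeled loosely on Hadoop 2.x `Shell.java`, where `checkHadoopHome()` prefers the hadoop.home.dir system property and falls back to HADOOP_HOME, and `getQualifiedBinPath()` builds the path. It is a sketch, not the actual Hadoop code. System properties and environment are passed in as plain mappings, and Windows path joining is forced with `ntpath` so the output looks the same on any OS.

```python
import ntpath
import os
from typing import Mapping, Optional


def check_hadoop_home(props: Mapping[str, str], env: Mapping[str, str]) -> Optional[str]:
    """Approximate Hadoop's home lookup: hadoop.home.dir first, then HADOOP_HOME."""
    home = props.get("hadoop.home.dir")        # like -Dhadoop.home.dir=...
    if home is None:
        home = env.get("HADOOP_HOME")          # fall back to the environment
    if home is None:
        return None                            # neither is set
    if len(home) >= 2 and home.startswith('"') and home.endswith('"'):
        home = home[1:-1]                      # tolerate a quoted value
    if not (os.path.isabs(home) and os.path.isdir(home)):
        return None                            # must be an existing absolute directory
    return home


def qualified_bin_path(home: Optional[str], executable: str = "winutils.exe") -> str:
    """Build HOME\\bin\\executable, turning a missing home into the text 'null'
    the way Java string concatenation does."""
    full = ntpath.join("null" if home is None else home, "bin", executable)
    if not os.path.exists(full):
        raise FileNotFoundError(f"Could not locate executable {full} in the Hadoop binaries.")
    return full
```

With neither setting present, `qualified_bin_path(check_hadoop_home({}, {}))` raises with `null\bin\winutils.exe` in the message, which is the symptom quoted above.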

Hope this helps.

Thanks, Kamleshwar.

I just ran into this issue while working with Eclipse. In my case, I had the correct Hadoop version downloaded (hadoop-2.5.0-cdh5.3.0.tgz). I extracted the contents and placed them directly on my C: drive. Then I went to

Eclipse->Debug/Run Configurations -> Environment (tab) -> and added

variable: HADOOP_HOME

Value: C:\hadoop-2.5.0-cdh5.3.0

You can download winutils.exe here: http://public-repo-1.hortonworks.com/hdp-win-alpha/winutils.exe

Then copy it to your HADOOP_HOME/bin directory.

winutils.exe is required for Hadoop to perform Hadoop-related commands. Download the hadoop-common-2.2.0 zip file, where winutils.exe can be found in the bin folder. Extract the zip file and copy winutils.exe into the local hadoop/bin folder.

I was facing the same problem. Removing bin from the HADOOP_HOME path solved it for me. HADOOP_HOME should point to the Hadoop folder itself, not to its bin subfolder.

A system restart may be needed. In my case, restarting the IDE was sufficient.
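The "remove bin from HADOOP_HOME" fix can be expressed as a small normalizing helper. This is a hypothetical sketch: it strips surrounding quotes and, if the last path component is `bin`, returns its parent. It uses `ntpath` so Windows-style paths with either slash are handled on any OS. Other values are returned unchanged.

```python
import ntpath


def normalize_hadoop_home(value: str) -> str:
    """Return HADOOP_HOME without surrounding quotes or a trailing 'bin' component."""
    value = value.strip().strip('"')
    trimmed = value.rstrip("\\/")              # ignore a trailing separator when testing
    if ntpath.basename(trimmed).lower() == "bin":
        return ntpath.dirname(trimmed)         # C:\hadoop\bin -> C:\hadoop
    return value
```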

Set up the HADOOP_HOME variable in Windows to resolve the problem.

You can find the answer in org/apache/hadoop/hadoop-common/2.2.0/hadoop-common-2.2.0-sources.jar!/org/apache/hadoop/util/Shell.java:

IOException from

HADOOP_HOME_DIR from

In PySpark, to run a local Spark application using PyCharm, use the following lines:
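A minimal sketch of one common approach, under stated assumptions: set HADOOP_HOME and put its bin folder on PATH in `os.environ` before the SparkSession is created. PySpark launches the JVM as a child process, which inherits the environment at that moment. The helper name `configure_hadoop_home` and the `C:\winutils` location are illustrative, not taken from the answer.

```python
import os
from pathlib import Path


def configure_hadoop_home(hadoop_home: str, env=os.environ) -> Path:
    """Point HADOOP_HOME at hadoop_home and prepend its bin folder to PATH.

    Call this before creating the SparkSession: the JVM only sees the
    environment that exists when it is launched.
    """
    home = Path(hadoop_home)
    winutils = home / "bin" / "winutils.exe"
    if not winutils.is_file():
        raise FileNotFoundError(f"expected {winutils}")
    env["HADOOP_HOME"] = str(home)
    bin_dir = str(home / "bin")
    current = env.get("PATH", "")
    if bin_dir not in current.split(os.pathsep):
        env["PATH"] = bin_dir if not current else bin_dir + os.pathsep + current
    return winutils
```

Typical use would be `configure_hadoop_home(r"C:\winutils")` followed by `SparkSession.builder.master("local[*]").getOrCreate()`, in that order.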

I was getting the same issue on Windows. I fixed it by:

- Downloading hadoop-common-2.2.0-bin-master from the link.

- Creating a user variable HADOOP_HOME in the environment variables and assigning the path of the hadoop-common bin directory as its value.

- Verifying it by running hadoop in cmd.

- Restarting the IDE and running the application again.

Download the desired version of the Hadoop folder from this link as a zip. For example, if you are installing Spark on Windows, pick the Hadoop version your Spark is built for.

Extract the zip to the desired directory. You need a directory of the form hadoop\bin (explicitly create such a hadoop\bin directory structure if you want). The bin folder should contain all the files from the bin folder of the downloaded Hadoop. That includes many files, such as hdfs.dll and hadoop.dll, in addition to winutils.exe.


Now create the environment variable HADOOP_HOME and set it to <path-to-hadoop-folder>\hadoop. Then add ;%HADOOP_HOME%\bin; to the PATH environment variable.

Open a 'new command prompt' and try rerunning your command.
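From the new prompt, a quick diagnostic can confirm the setup described in these steps. This hypothetical helper checks three things: HADOOP_HOME is set, it does not end in bin, and %HADOOP_HOME%\bin\winutils.exe exists and is reachable through PATH. It uses `shutil.which` with an explicit `path` argument so it can also be run against a test environment. Some answers here say PATH is not needed for Hadoop itself to find winutils.exe, so treat the PATH message as relevant mainly for running the hadoop command from cmd.

```python
import os
import shutil
from pathlib import Path


def diagnose_winutils(env=None) -> list:
    """Return a list of problems with the HADOOP_HOME / PATH setup (empty if none found)."""
    env = dict(os.environ) if env is None else env
    problems = []
    home = env.get("HADOOP_HOME")
    if not home:
        problems.append("HADOOP_HOME is not set")
        return problems
    home_path = Path(home.strip('"'))
    if home_path.name.lower() == "bin":
        problems.append("HADOOP_HOME should point at the folder above bin")
    exe = home_path / "bin" / "winutils.exe"
    if not exe.is_file():
        problems.append(f"{exe} not found")
    # search the PATH given in env, not necessarily the current process's PATH
    if shutil.which("winutils.exe", path=env.get("PATH", "")) is None:
        problems.append("winutils.exe is not reachable through PATH")
    return problems
```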

- Download winutils.exe from the URL https://github.com/steveloughran/winutils/tree/master/hadoop-<version>/bin

- Paste it under HADOOP_HOME/bin

Note: you should set the environment variables:

User variable:

Variable: HADOOP_HOME

Value: Hadoop or spark dir

I used the hbase-1.3.0 and hadoop-2.7.3 versions. Setting the HADOOP_HOME environment variable and copying winutils.exe into the HADOOP_HOME/bin folder solves the problem on Windows. Take care to set HADOOP_HOME to the Hadoop installation folder (the /bin folder is not necessary for these versions). Additionally, I preferred using the cross-platform tool Cygwin to provide Linux OS functionality as far as possible, because the HBase team recommends a Linux/Unix environment.

winutils.exe is used for running shell commands for Spark. When you need to run Spark without installing Hadoop, you need this file.

Steps are as follows:

Download winutils.exe for Hadoop 2.7.1 from https://github.com/steveloughran/winutils/tree/master/hadoop-2.7.1/bin [NOTE: If you are using a different Hadoop version, download winutils.exe from the corresponding Hadoop version folder on GitHub at the location mentioned above.]

Now, create a folder winutils on the C: drive, create a folder bin inside it, and copy winutils.exe into that folder. The location of winutils.exe will then be C:\winutils\bin\winutils.exe.

Now, open the environment variables and set HADOOP_HOME=C:\winutils [NOTE: Please do not add bin to HADOOP_HOME, and there is no need to add HADOOP_HOME to Path.]
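The download-and-place steps can be scripted. This sketch assumes that GitHub's `raw` form of the tree URL above serves the file itself, with one `hadoop-<version>` folder per version. Only versions that actually have a folder in the repository will download. The function names and the `C:\winutils` default are illustrative. A binary fetched this way should still be checked against its .asc signature before use.

```python
import urllib.request
from pathlib import Path

# Assumed raw-file form of https://github.com/steveloughran/winutils/tree/master/hadoop-<version>/bin
RAW_URL = "https://github.com/steveloughran/winutils/raw/master/hadoop-{version}/bin/winutils.exe"


def winutils_url(version: str) -> str:
    """URL of winutils.exe for a Hadoop version such as '2.7.1'."""
    return RAW_URL.format(version=version)


def install_winutils(version: str, root: Path = Path(r"C:\winutils")) -> Path:
    """Download winutils.exe into <root>\\bin and return its path."""
    target_dir = Path(root) / "bin"
    target_dir.mkdir(parents=True, exist_ok=True)
    target = target_dir / "winutils.exe"
    urllib.request.urlretrieve(winutils_url(version), target)
    return target
```

After `install_winutils("2.7.1")`, HADOOP_HOME would be set to C:\winutils as described in the steps.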

Your issue should now be resolved.